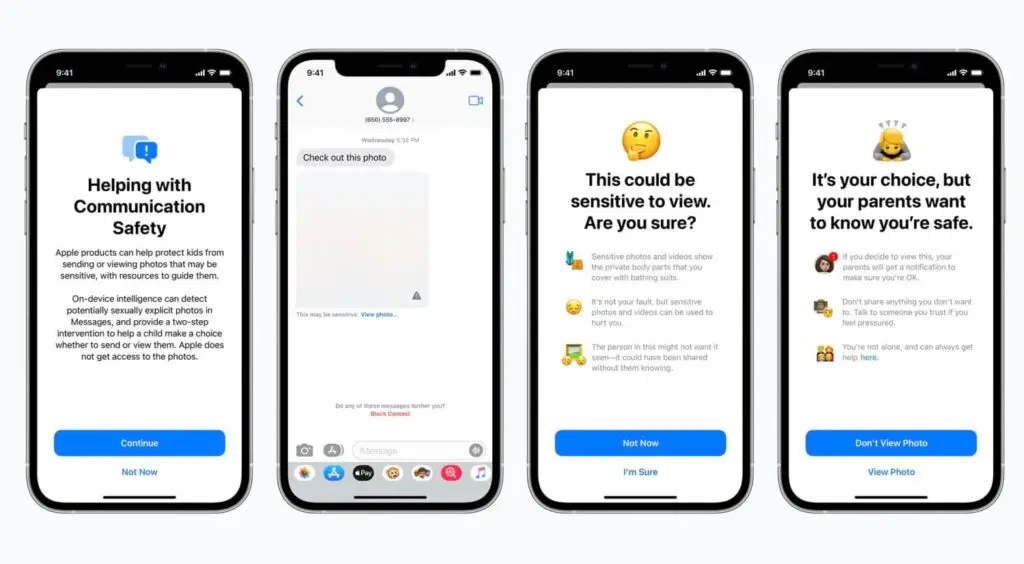

Apple Scans and Reviews Your Pictures and Messages

Apple is now hunting for signs of child abuse in messages and photos.

Apple is violating iPhone privacy to stop child abuse. The company plans to scan users' photos with an algorithm that looks for evidence of child abuse.

If the algorithm finds such evidence, it sends the image to a human reviewer. The idea that Apple employees could end up viewing legitimate photos of a user's children is fundamentally worrying.

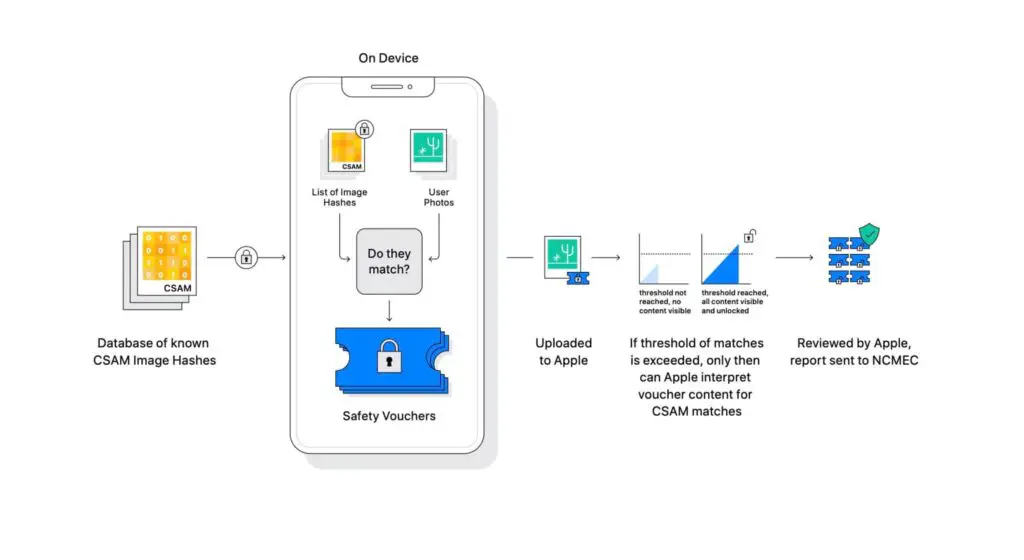

Shortly after the article was published, Apple confirmed that its software will hunt for child abuse material. The company's "Expanded Protections for Children" post outlined plans to curb the spread of child sexual abuse material (CSAM).

As part of these plans, Apple is introducing new technology in iOS and iPadOS that "allows Apple to detect known CSAM images stored in iCloud Photos." Essentially, on-device scanning is performed for all media stored in iCloud Photos. If the software flags an image as suspicious, it sends the image to Apple, which decrypts it and reviews it. If Apple finds that the content is in fact illegal, it notifies the authorities.
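To make "detecting known images" concrete, here is a minimal sketch of matching a photo library against a database of fingerprints. It is not Apple's implementation: the names `KNOWN_HASHES`, `fingerprint`, and `flag_for_review` are hypothetical, and SHA-256 over the raw bytes is only a stand-in for whatever image fingerprint the real system uses. The sketch also leaves out the encryption and human review steps described above.

```python
from __future__ import annotations

import hashlib

# Hypothetical database of fingerprints for already-known images (assumption).
KNOWN_HASHES = {hashlib.sha256(b"known-image-bytes").hexdigest()}


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint: SHA-256 of the raw bytes (an assumption, not Apple's method)."""
    return hashlib.sha256(image_bytes).hexdigest()


def flag_for_review(library: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the names of images whose fingerprint appears in the known set."""
    return [name for name, data in library.items() if fingerprint(data) in known_hashes]


# Toy library: one ordinary photo and one whose bytes match a known entry.
library = {
    "beach.jpg": b"holiday-photo-bytes",
    "match.jpg": b"known-image-bytes",
}
flagged = flag_for_review(library, KNOWN_HASHES)
print(flagged)  # ['match.jpg']
```

In this toy model, only images whose fingerprint is already in the database are flagged, so a new family photo is not matched. Whether a real system behaves the same way depends on how its fingerprints handle similar-looking images, which this sketch does not model.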

Apple claims that there is "less than a one in one trillion chance per year of incorrectly flagging a given account."